Cooling Solutions for Modern HPC Facilities and Data Centers

10/29/2021 3:00 PM

Challenges of heat management

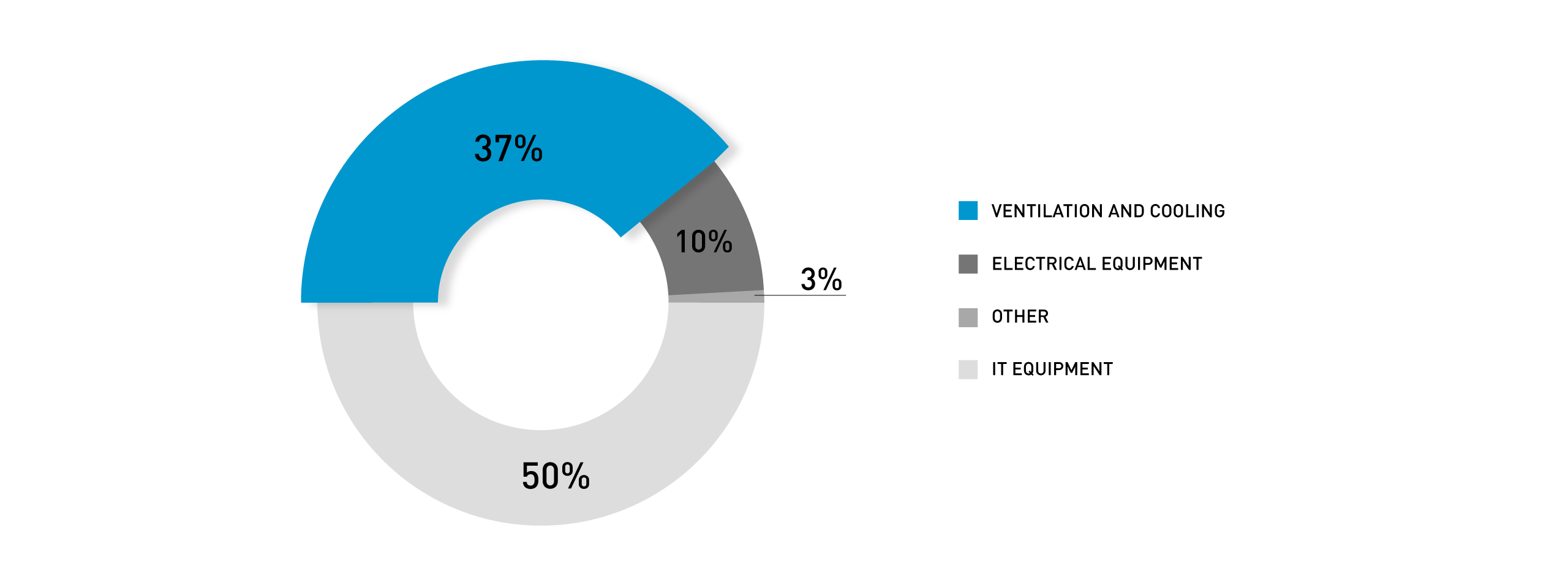

High Performance Computing (HPC) facilities that develop artificial intelligence and machine learning applications rely heavily on powerful GPUs and multithreaded CPUs. Data centers and colocation facilities rely on rows upon rows of server racks filled to the brim with high-performance CPUs, petabytes of RAM, and extensive storage. Despite modern advances in manufacturing and power efficiency, all this silicon generates tremendous amounts of heat. So much, in fact, that up to 40% of a facility's total energy consumption is spent keeping the IT equipment within acceptable operating temperatures.

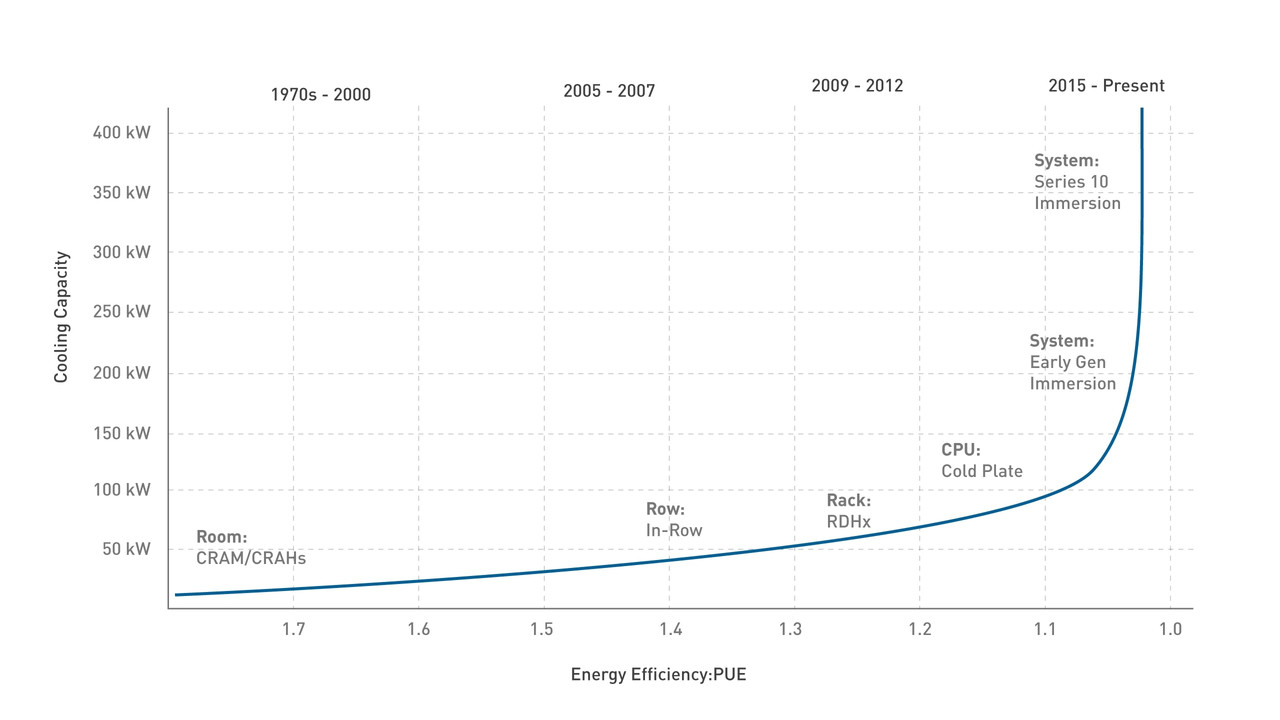

This is best illustrated by the Power Usage Effectiveness (PUE) ratio, which describes how efficiently a computing facility uses energy. More specifically, it compares the total energy drawn by the facility with the energy used by the IT equipment itself, so that cooling and other supporting overhead show up as the difference. This isn’t a new problem, but recent initiatives for more efficient and economical cooling have brought it into sharper focus.
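To make the ratio concrete, here is a minimal sketch of the PUE arithmetic: total facility energy divided by IT equipment energy, so a value of 1.0 would mean zero overhead. The function names and the 1,000 MWh / 600 MWh facility are hypothetical; the second helper simply shows what the 40% cooling share mentioned above implies for PUE.

```python
def pue(total_facility_kwh: float, it_equipment_kwh: float) -> float:
    """Power Usage Effectiveness: total facility energy divided by IT equipment energy."""
    if it_equipment_kwh <= 0:
        raise ValueError("IT equipment energy must be positive")
    if total_facility_kwh < it_equipment_kwh:
        raise ValueError("total facility energy cannot be lower than IT energy")
    return total_facility_kwh / it_equipment_kwh


def pue_from_overhead_share(overhead_share: float) -> float:
    """PUE implied when `overhead_share` of all facility energy goes to non-IT loads."""
    if not 0.0 <= overhead_share < 1.0:
        raise ValueError("overhead share must be in [0, 1)")
    return 1.0 / (1.0 - overhead_share)


if __name__ == "__main__":
    # Hypothetical facility: 1,000 MWh drawn in total, 600 MWh reaching the IT equipment.
    print(round(pue(1_000_000, 600_000), 2))  # 1.67
    # If cooling alone takes 40% of total energy, PUE is at least this value.
    print(round(pue_from_overhead_share(0.40), 2))  # 1.67
```

Any overhead beyond cooling (power distribution losses, lighting) only pushes the ratio further above 1.0.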

Currently deployed and expected IT equipment heat generation

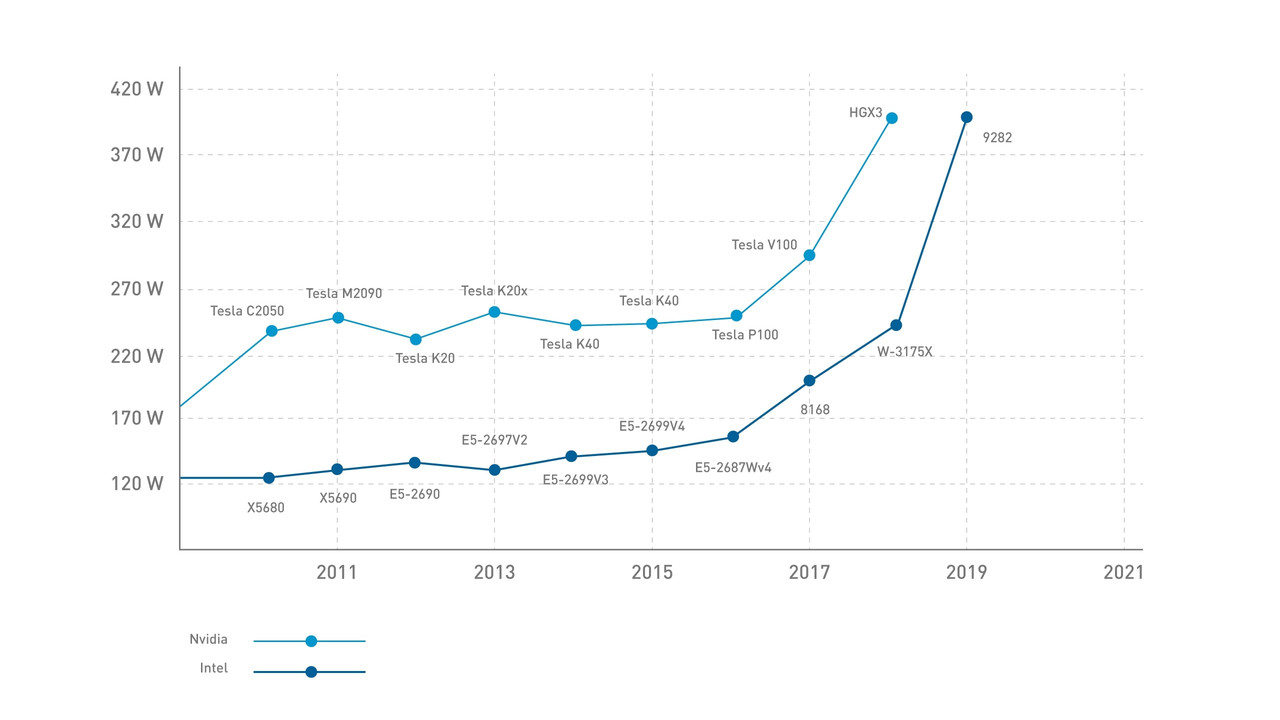

CPUs currently in wide use in the server market consume around 200-280W. Within this range, air cooling can still handle the heat. Beyond it, however, air cooling becomes virtually ineffective. GPUs, meanwhile, are pushing the boundaries of modern cooling solutions, with designs that consume well above 400W; some models, like the Nvidia A100, reach as much as 500W per card.

The 500W variant of the A100 is only available and viable thanks to liquid cooling in the server enclosures where it is deployed. Forecasts for future designs point only to steady growth in microprocessor power consumption.

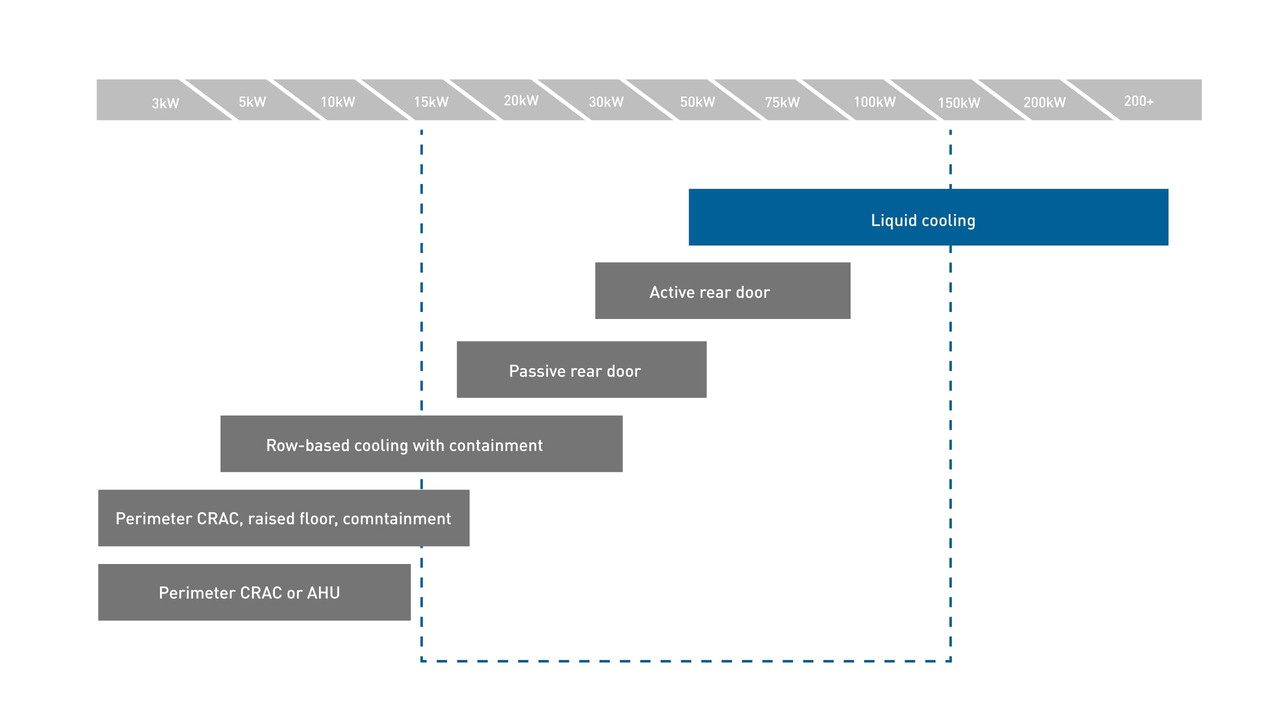

Current cooling solutions

The vast majority of HPC facilities still rely on air cooling. Servers use high-power fans and metal heatsinks to dissipate the heat generated by their components. This heated air must then be cooled by costly computer room air handler (CRAH) and computer room air conditioner (CRAC) systems, driving up total operating costs. This is a particular problem in regions where ambient temperatures stay above 35°C (95°F) for extended periods. To address this, we explored the benefits and technology of liquid cooling in our previous blog.

There are several methods of liquid cooling:

- Direct-to-chip liquid cooling with rack Coolant Distribution Units (CDU)

- Direct-to-chip liquid cooling with in-rack heat rejection

- Direct-to-chip liquid cooling with chassis contained heat rejection

- Immersion liquid cooling

- Hybrid rear door air to liquid heat exchangers

Direct-to-chip liquid cooling with rack Coolant Distribution Units (CDU)

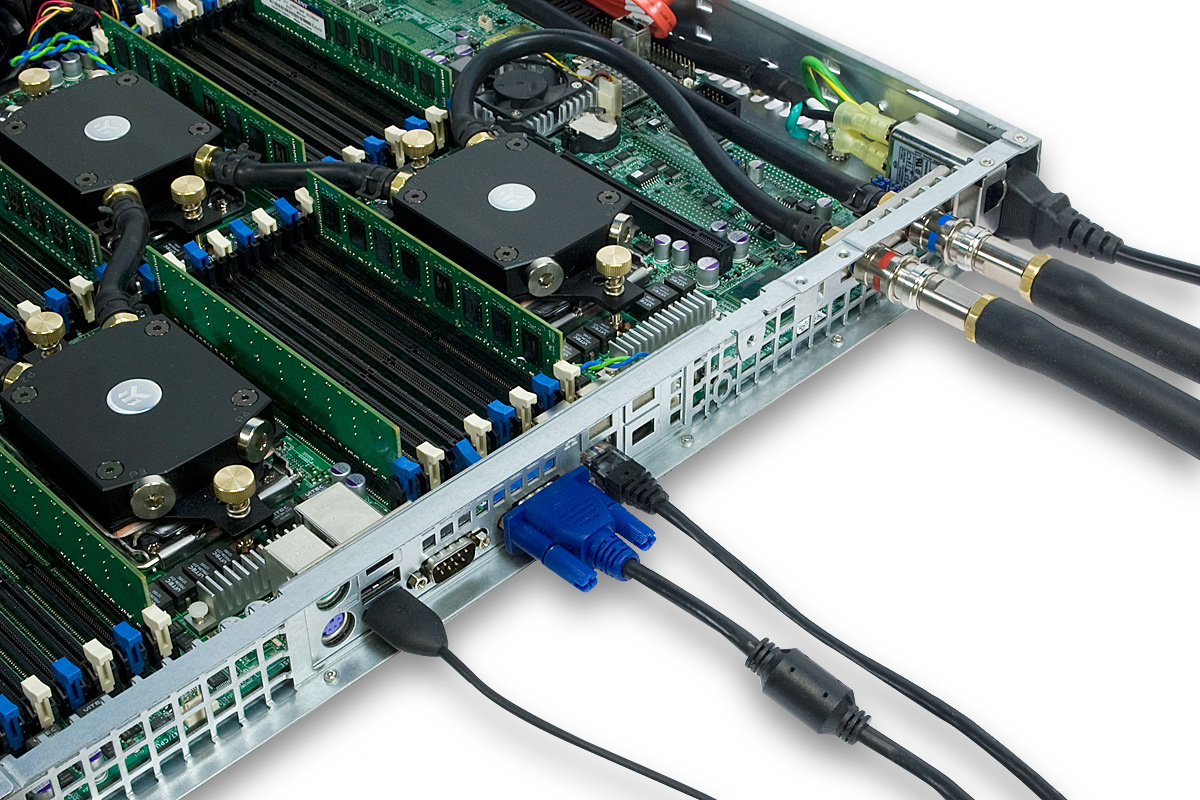

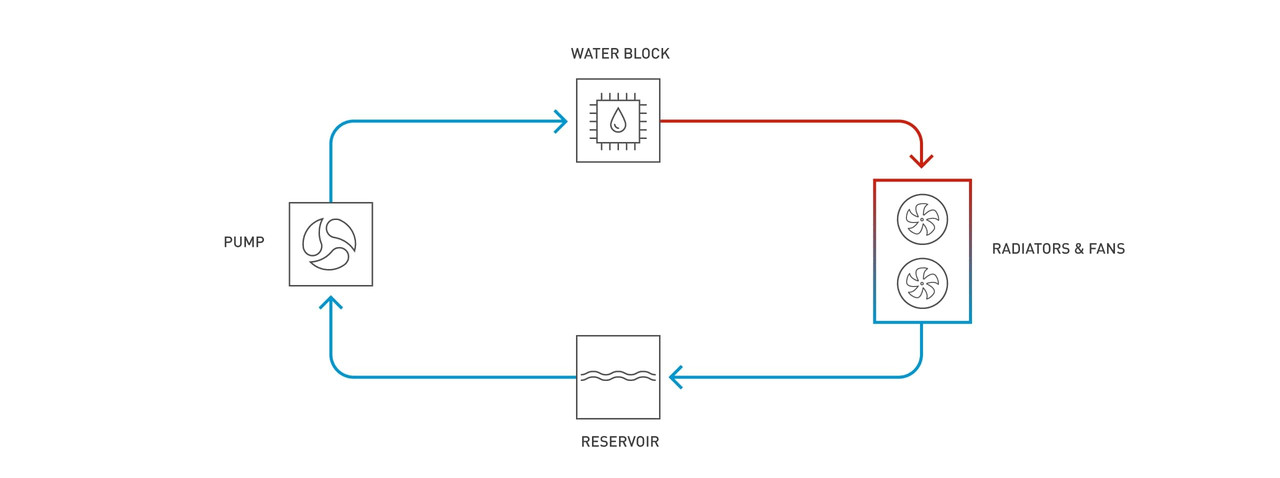

At its core, this type of cooling draws heat directly from the chips through purpose-designed water blocks. To achieve the best heat transfer to the coolant, many microchannels need to be either cut or skived into the surface of the cold plate. But a delicate balance must be maintained: an overly dense array significantly increases hydraulic resistance and can lower the overall cooling performance of the water block. For over 15 years, EK has been one of the leading manufacturers of such high heat flux water blocks, continually improving its designs.

The warmed-up coolant, which can reach 50-60°C (122-140°F), is pumped through nonpermeable tubing (usually made of EPDM rubber) into CDUs that act as collectors for the warm coolant. Connections to the CDU are usually made with dripless quick disconnect couplings (QDCs), which simplify servicing and upgrades and minimize downtime. On a smaller scale, similar industrial-grade QDCs and CDUs are used in our Fluid Works workstations.
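How much coolant a loop has to move follows from the basic heat balance Q = m_dot * cp * dT. The sketch below is illustrative only: it assumes plain-water properties (glycol mixtures have a lower specific heat), and the 3 kW server with a 10 K coolant temperature rise is a hypothetical example. A CDU has to supply the sum of these flows for every server connected to it.

```python
# Approximate properties of plain water near 40-50 °C (assumed; glycol mixes differ).
WATER_CP_J_PER_KG_K = 4180.0
WATER_DENSITY_KG_PER_L = 0.99


def coolant_flow_lpm(heat_load_w: float, delta_t_k: float,
                     cp: float = WATER_CP_J_PER_KG_K,
                     density: float = WATER_DENSITY_KG_PER_L) -> float:
    """Coolant flow (L/min) needed to carry `heat_load_w` watts away while the
    coolant warms by `delta_t_k` kelvin, from Q = m_dot * cp * dT."""
    if heat_load_w < 0 or delta_t_k <= 0:
        raise ValueError("heat load must be >= 0 and temperature rise > 0")
    mass_flow_kg_s = heat_load_w / (cp * delta_t_k)
    return mass_flow_kg_s / density * 60.0  # kg/s -> L/s -> L/min


if __name__ == "__main__":
    # Hypothetical 3 kW server whose coolant warms by 10 K across the water blocks.
    print(round(coolant_flow_lpm(3000, 10), 2))  # 4.35
    # Allowing a 20 K rise halves the required flow.
    print(round(coolant_flow_lpm(3000, 20), 2))  # 2.17
```

The trade-off is visible in the second call: a larger allowed temperature rise means less pumping, and it also produces the warmer return coolant that makes free cooling easier.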

The coolant can then be pumped through larger-diameter pipes to the facility's cooling units. Because of the large temperature difference between the coolant and the ambient air, facilities can often rely heavily on free cooling with dry liquid-to-air heat exchangers, achieving significant savings in electricity and water.
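Why warm coolant matters for free cooling can be sketched with a deliberately simplified model: assume a dry cooler can bring the liquid to within a fixed "approach" of the ambient air temperature, so any hour where ambient + approach stays at or below the required supply temperature needs no chiller. The 5 K approach, the 40 °C and 20 °C setpoints, and the temperature list are all hypothetical values chosen for illustration, not measured data.

```python
def free_cooling_fraction(hourly_ambient_c: list[float], supply_setpoint_c: float,
                          approach_k: float = 5.0) -> float:
    """Fraction of hours in which a dry cooler alone could bring the coolant
    down to `supply_setpoint_c`.

    Simplified model (assumption): the dry cooler cools the liquid to within
    `approach_k` kelvin of ambient, so an hour counts as free cooling when
    ambient + approach <= supply setpoint.
    """
    if not hourly_ambient_c:
        raise ValueError("need at least one ambient temperature sample")
    free_hours = sum(1 for t in hourly_ambient_c if t + approach_k <= supply_setpoint_c)
    return free_hours / len(hourly_ambient_c)


if __name__ == "__main__":
    # Illustrative (not measured) hourly ambient temperatures, in °C.
    ambient = [22.0, 28.0, 35.0, 38.0, 41.0, 30.0]
    # Warm-water liquid cooling loop with a hypothetical 40 °C supply setpoint.
    print(round(free_cooling_fraction(ambient, 40.0), 2))  # 0.67
    # A loop that must deliver 20 °C coolant gets no free cooling in these hours.
    print(free_cooling_fraction(ambient, 20.0))  # 0.0
```

Real dry-cooler performance also depends on airflow, humidity, and load, so this only captures the direction of the effect.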

Direct-to-chip liquid cooling with in-rack heat rejection

This form of cooling is very similar to the CDU-based method. It still uses customized water blocks to take up heat from the microprocessors, but instead of pumping the warm coolant into the facility’s network, it relies on its own pumps, in-rack chillers, and radiators for final heat rejection.

Direct-to-chip liquid cooling with chassis-contained heat rejection

The EK Fluid Works X7000-RM falls squarely into this category. It features up to 7 liquid-cooled GPUs, along with copious amounts of RAM and a high-core-count AMD Epyc 7002/7003 CPU. All that hardware, plus the radiators, pumps, and redundant power supplies, is contained within a 5U chassis. Direct liquid cooling (DLC) enables considerably higher compute density, and because the system is fully self-contained, it is an excellent candidate for retrofitting legacy data centers.

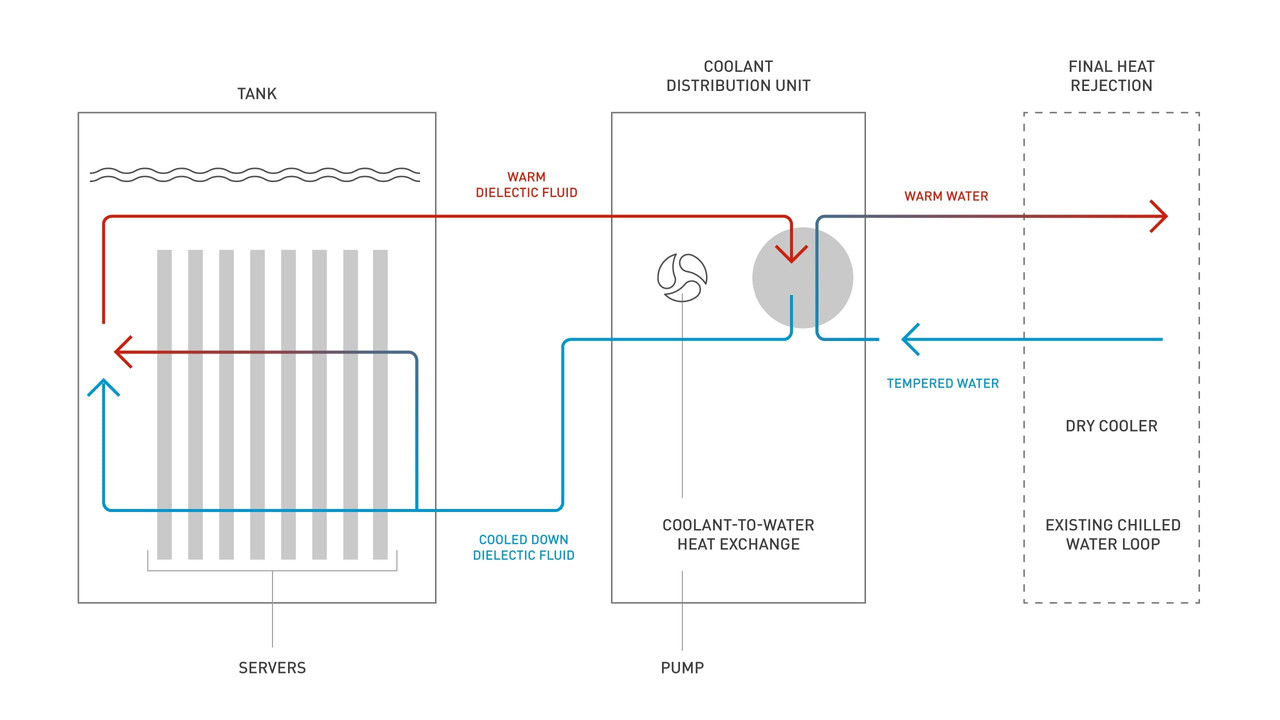

Immersion liquid cooling

A more recent method of taming tens of kW of power is immersion liquid cooling. Instead of circulating coolant through sealed systems of water blocks and tubes, the entire server is immersed in a nonconductive coolant in large baths or tanks. This coolant is specially engineered to be noncorrosive, nonconductive, inert, long-lasting, and of minimal or no toxicity. It can be either single-phase or two-phase, which changes the way it removes heat from the components. Final heat rejection works much as it does in CDU-based DLC systems. We will explore this subject in more detail in a future blog, so keep an eye out for it in our newsletters!

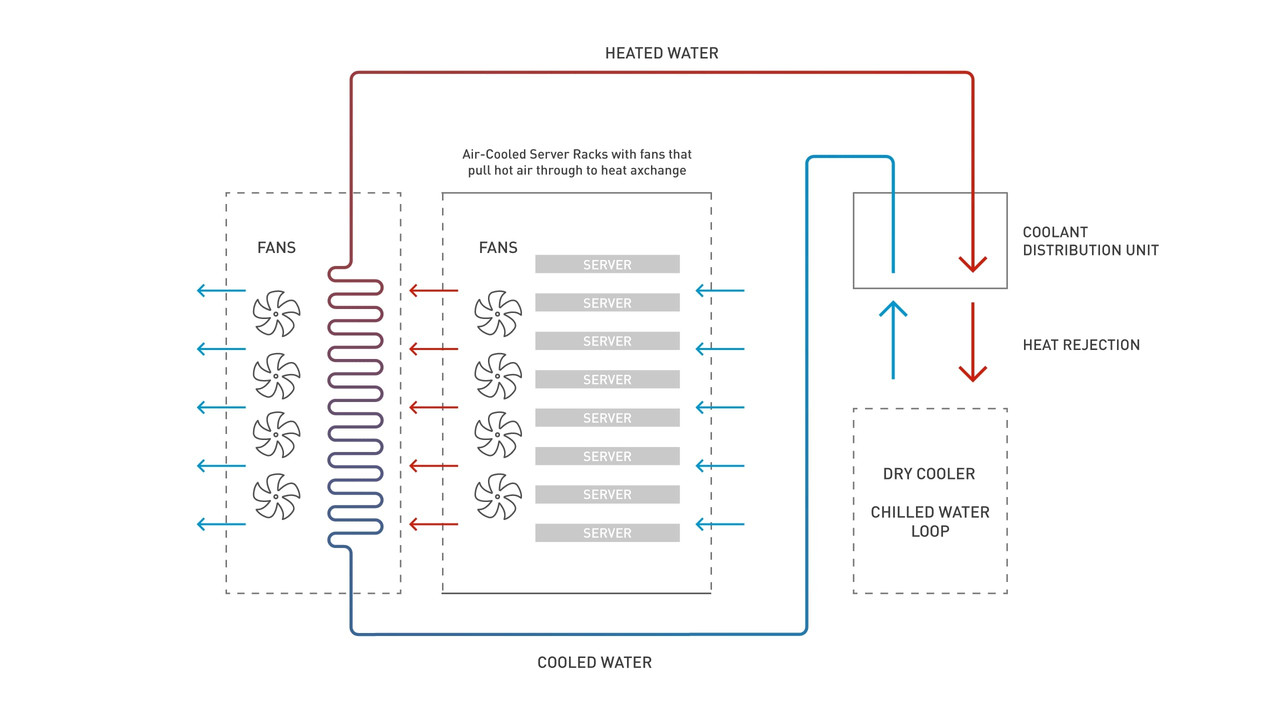

Hybrid rear door air-to-liquid heat exchangers

This crossover solution boosts an existing CRAC system by essentially bolting large fan-equipped radiators to the back of the server cabinet. It can effectively cool most of the heated air produced by the IT equipment, but it requires some modifications to the server room.

However, it does not solve the underlying issue that air is an inefficient cooling medium, which limits total rack power by a considerable margin compared with full-blooded liquid cooling solutions.
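The gap between air and water comes down to how much heat a given volume of fluid can absorb per kelvin (density times specific heat). This sketch compares the volume flow each fluid needs for the same heat load and temperature rise. The property values are approximate room-temperature figures, and the 1 kW load with a 10 K rise is a hypothetical example.

```python
# Approximate fluid properties near room temperature (assumed textbook values).
AIR_DENSITY_KG_M3 = 1.2
AIR_CP_J_PER_KG_K = 1005.0
WATER_DENSITY_KG_M3 = 997.0
WATER_CP_J_PER_KG_K = 4180.0


def volumetric_flow_m3_s(heat_load_w: float, delta_t_k: float,
                         density_kg_m3: float, cp_j_per_kg_k: float) -> float:
    """Volume flow needed to absorb `heat_load_w` with a `delta_t_k` temperature rise."""
    if delta_t_k <= 0:
        raise ValueError("temperature rise must be positive")
    return heat_load_w / (density_kg_m3 * cp_j_per_kg_k * delta_t_k)


if __name__ == "__main__":
    heat_w, rise_k = 1000.0, 10.0  # hypothetical 1 kW load, 10 K temperature rise
    air = volumetric_flow_m3_s(heat_w, rise_k, AIR_DENSITY_KG_M3, AIR_CP_J_PER_KG_K)
    water = volumetric_flow_m3_s(heat_w, rise_k, WATER_DENSITY_KG_M3, WATER_CP_J_PER_KG_K)
    print(f"air:   {air * 1000:.1f} L/s")    # air:   82.9 L/s
    print(f"water: {water * 1000:.3f} L/s")  # water: 0.024 L/s
    print(f"ratio: {air / water:.0f}x")      # ratio: 3456x
```

Roughly three thousand times more air than water has to be moved for the same job, which is why rear door heat exchangers still leave the rack bound by airflow.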

The Environmental and Economic Impact of Liquid Cooling

PUE is commonly used to roughly compare the power efficiency of data centers with different cooling solutions. Although well established, this industry standard has its flaws and shortcomings. The two main issues are that server chassis fans are counted as part of the IT load, and that the power efficiency gains of processors operating at lower temperatures are not taken into account. These two factors alone can account for 10-30% of the “IT load,” further concealing the inefficiencies of air cooling. Despite advances in free cooling technology, relying solely on air-based solutions to maintain adequate operating temperatures can significantly increase OPEX, especially as electricity prices per kWh rise around the globe.
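The first of these flaws is easy to quantify: moving server fan power out of the IT load and into overhead raises the PUE of an air-cooled facility. The sketch below models only the fan effect (not the temperature-dependent processor efficiency), and its figures are hypothetical: 1,500 kW total, 1,000 kW metered as IT, of which 100 kW (10%, inside the 10-30% range cited above for both factors combined) drives chassis fans.

```python
def reported_pue(total_kw: float, it_meter_kw: float) -> float:
    """PUE as conventionally reported: server fans sit inside the IT meter."""
    if it_meter_kw <= 0:
        raise ValueError("IT load must be positive")
    return total_kw / it_meter_kw


def pue_fans_as_overhead(total_kw: float, it_meter_kw: float, server_fan_kw: float) -> float:
    """PUE if server chassis fan power is counted as cooling overhead instead of IT load."""
    compute_kw = it_meter_kw - server_fan_kw
    if compute_kw <= 0:
        raise ValueError("fan power must be smaller than the metered IT load")
    return total_kw / compute_kw


if __name__ == "__main__":
    # Hypothetical air-cooled facility: 1,500 kW total, 1,000 kW metered as IT,
    # of which 100 kW actually drives server chassis fans.
    print(reported_pue(1500, 1000))                         # 1.5
    print(round(pue_fans_as_overhead(1500, 1000, 100), 2))  # 1.67
```

A liquid-cooled server that needs little or no fan power barely changes under this reclassification, so the comparison between the two cooling approaches becomes fairer.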

In some areas of the world, exceptionally high electricity prices can make operating data centers or HPC facilities prohibitive. Liquid cooling provides an actionable, economical solution and, thanks to its exceptional free cooling potential, enables expansion into new markets. Using renewable energy sources and reusing the data center’s waste heat can minimize its environmental impact. Yet numerous countries still rely heavily on fossil fuels for energy generation; there, improved power efficiency alone can substantially reduce CO2 emissions and improve overall air quality.

Conclusion

Industries hunger for more computational power in smaller form factors while still trying to improve the energy efficiency of high-performance computing facilities. This push for “greener” technology and solutions is essentially forcing data center engineers to improve future cooling systems, and, for now, that future is liquid!