Server Power in Your Workstation

1/28/2022 12:09 PM

Workstations weren’t always pushing the boundaries of science and discovery. Before the rise of artificial intelligence and its subcategories, machine learning and deep learning, the average workstation was expected to handle relatively simple tasks like office and audio-visual work. The requirements for that kind of work, however, are dwarfed by what AI needs to deliver satisfactory results.

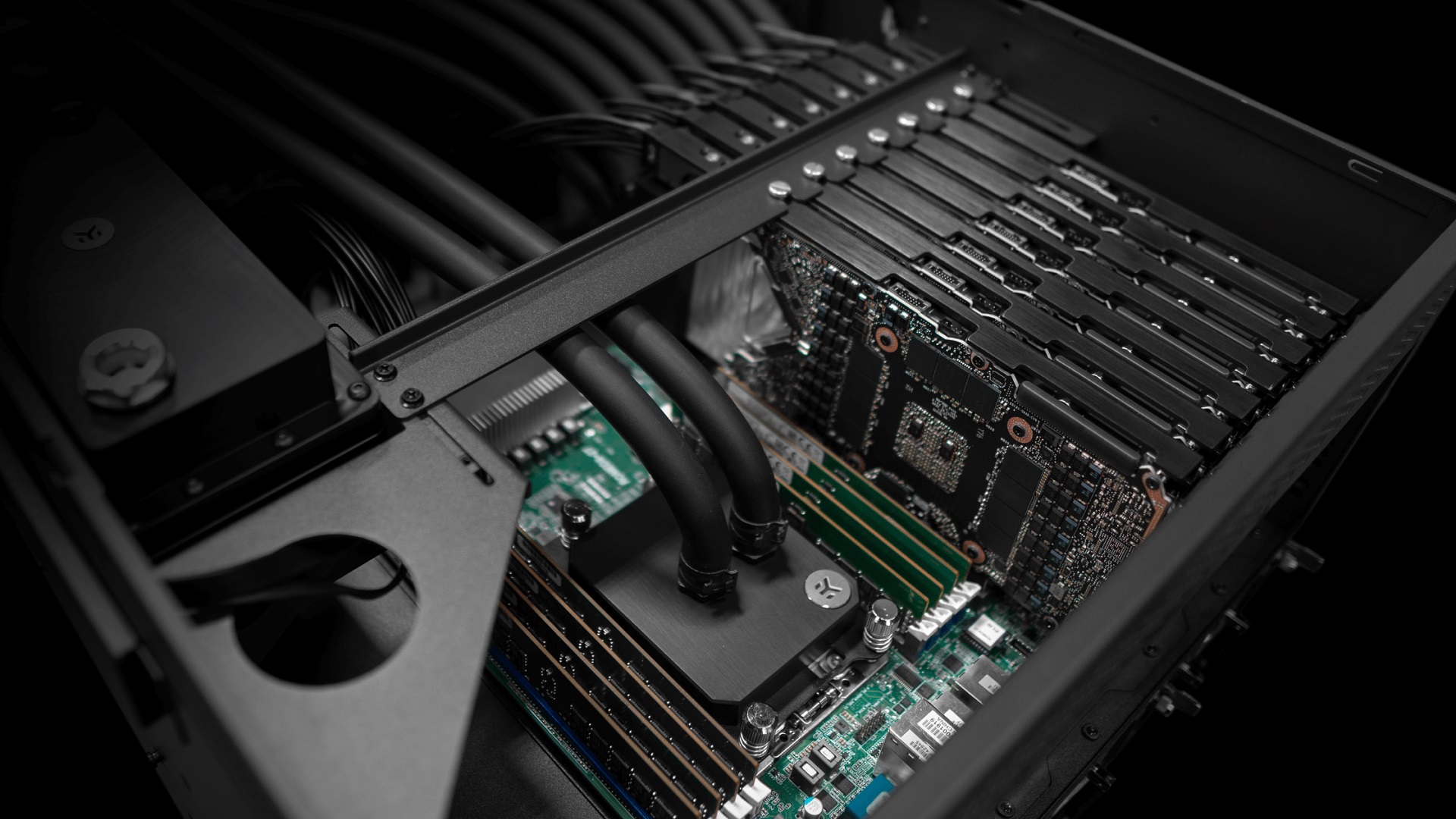

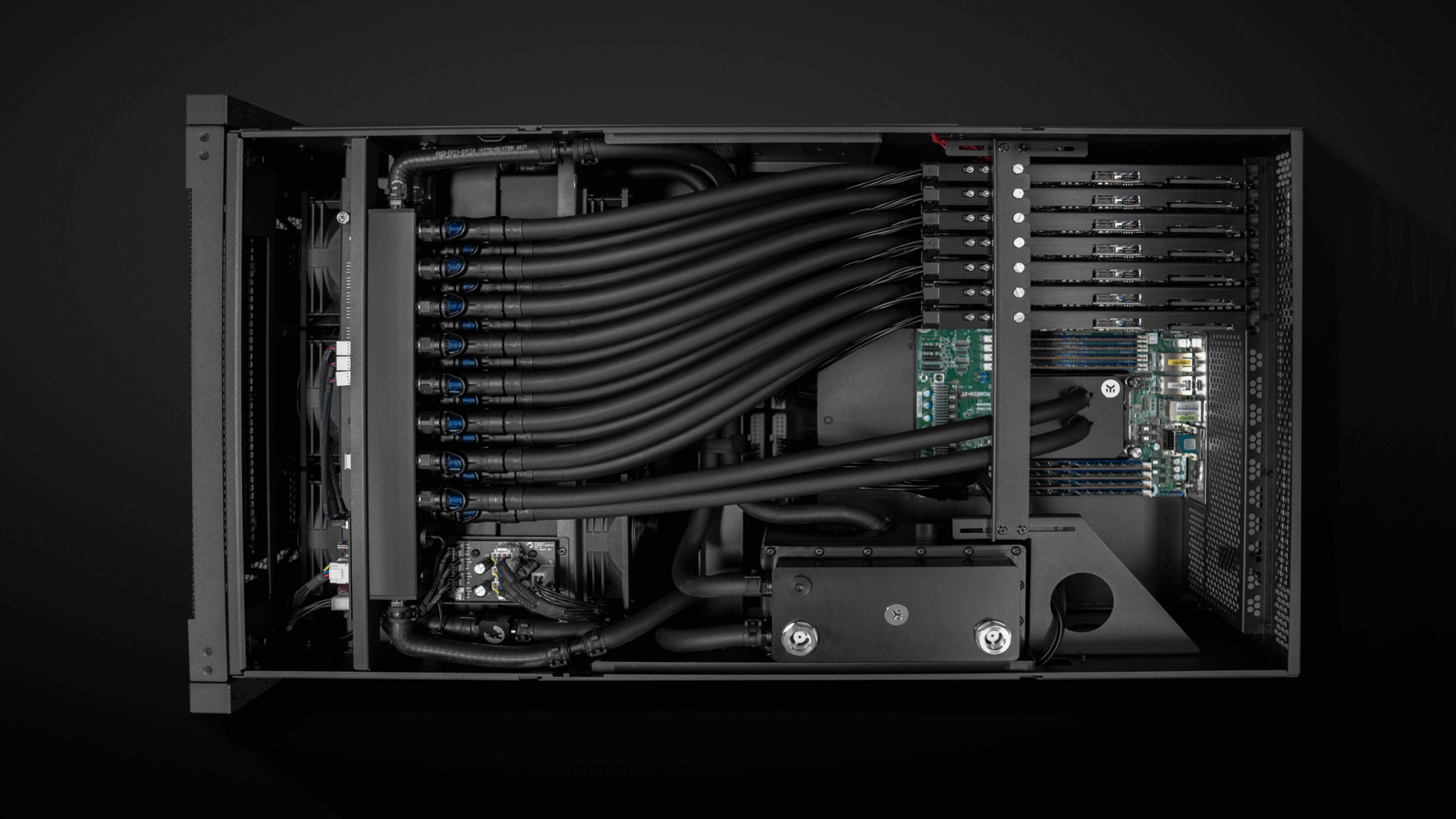

Unfortunately, AI demands much more from hardware than a typical workstation can deliver. As a result, the need arose for advanced colocation facilities and dedicated data centers. Now rows upon rows of servers toil away for days on end to deliver results in a timely manner. The only downside is that they are expensive. Thankfully, thanks to advances in microprocessor architecture and cooling technology, you can harness a server’s power inside a more reasonably priced and convenient professional workstation.

There are more advancements to be excited about. Software has kept pace with the hardware, and we now have a plethora of computation suites, each fully leveraging the AI-specialized cores found in GPUs and some CPUs. Yet the sheer volume of data that must be processed quickly requires a considerable boost in compute density to make an AI workstation viable.

The building blocks of an AI workstation

- CPU(s) – It’s important to decide how many CPU cores and how much processing power you need.

- Motherboard – The CPU you choose will largely dictate your motherboard, and the motherboard in turn defines the connectivity and expandability of your workstation.

- GPUs – As the kings of AI, GPUs will be doing the bulk of the work. Their capabilities and power determine how quickly your tasks and projects are completed.

- RAM – It’s vital to get the right amount of RAM for your current and future needs.

- Storage – A good mix of snappy SSDs and large capacity HDDs is a wise choice.

- The cooling system – There are two choices: air cooling and liquid cooling. We will cover the pros and cons in detail, but you can check out our previous blog on why liquid cooling is the superior choice.

- PSU, chassis, UPS, and peripherals – These supporting systems are of lesser importance, but they are not to be taken lightly.

The right CPU for an AI workstation

AMD vs Intel. This is the first hurdle to overcome. Yes, there are some ARM-based solutions out there, but they are much harder to come by than these two.

Not too long ago, the choice would have been obvious: Intel, right? But AMD has come a long way and is now real competition for Intel, which is great news for the consumer. For machine learning, unless you are building a single-GPU system, you can pretty much forget about the more affordable options. We need to go for the big guns from the get-go: the Core i7/i9, Xeon, Ryzen 9, Threadripper, and EPYC lines.

To handle the various general tasks, data preparation, and subprocesses, you will want a solid number of cores. But what we want even more is a higher-than-average number of PCIe lanes and RAM capacity. The PCIe lanes are needed to give multiple GPUs enough bandwidth so as not to bottleneck their performance, and the larger RAM pool (sometimes with ECC support) will serve us well with very large data sets.

In the Intel corner, we are pretty much limited to the higher-end components, such as the Core i9 X-series, the Ice Lake Xeon W-3300, and Xeon Scalable CPUs. These often offer more than 40 PCIe lanes, which ensures that the most common GPU configurations get at least 8 PCIe lanes per GPU.

AMD, on the other hand, has really opened the floodgates. Its EPYC CPUs come with as many as 64 cores/128 threads and an impressive 128 PCIe lanes. That is enough to give up to 8 GPUs a full x16 link each. A true behemoth. The one downside, however, is the more limited RAM capacity, which tops out at 512GB of ECC/REG DDR4.
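To make the lane math concrete, here is a minimal Python sketch that estimates the link width each GPU gets when a CPU’s lanes are split evenly across the cards. It assumes every lane goes to the GPUs (in practice some are reserved for NVMe drives and other devices), that links come in the standard x16/x8/x4/x2/x1 widths, and that the platform runs PCIe 4.0 unless told otherwise. The function names are our own illustration, not part of any vendor tool.

```python
# Hypothetical sketch: an even split of CPU PCIe lanes across GPUs.
# Assumption: all lanes are available to GPUs (none reserved for NVMe etc.).
STANDARD_WIDTHS = (16, 8, 4, 2, 1)  # standard PCIe link widths, widest first

# Approximate usable bandwidth per lane, per direction, in GB/s:
# transfer rate (GT/s) x 128b/130b encoding efficiency / 8 bits per byte.
GBPS_PER_LANE = {3: 8 * 128 / 130 / 8, 4: 16 * 128 / 130 / 8}


def lanes_per_gpu(cpu_lanes: int, num_gpus: int) -> int:
    """Widest standard link width that every GPU can get at the same time."""
    if num_gpus < 1:
        raise ValueError("num_gpus must be at least 1")
    for width in STANDARD_WIDTHS:
        if width * num_gpus <= cpu_lanes:
            return width
    return 0  # not enough lanes for even a x1 link per GPU


def gpu_bandwidth_gbps(cpu_lanes: int, num_gpus: int, pcie_gen: int = 4) -> float:
    """Approximate per-GPU, per-direction bandwidth in GB/s (PCIe 4.0 assumed by default)."""
    return lanes_per_gpu(cpu_lanes, num_gpus) * GBPS_PER_LANE[pcie_gen]


if __name__ == "__main__":
    for cpu_lanes, num_gpus in [(48, 4), (128, 8)]:
        width = lanes_per_gpu(cpu_lanes, num_gpus)
        bw = gpu_bandwidth_gbps(cpu_lanes, num_gpus)
        print(f"{cpu_lanes} lanes, {num_gpus} GPUs: x{width}, ~{bw:.1f} GB/s each")
```

With 48 lanes and 4 GPUs, the sketch lands on x8 per card, in line with the Intel figures above, while 128 lanes across 8 GPUs leaves each one a full x16 link. On a PCIe 3.0 platform, pass `pcie_gen=3` and the per-GPU bandwidth halves.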

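To sum up the CPU choice: if you need more GPUs and their combined VRAM stays under 256GB, go with AMD. A common rule of thumb is to have at least twice as much system RAM as combined VRAM, so a 512GB ceiling covers up to 256GB of VRAM. But for higher-capacity GPUs, you might be better off with the Intel option despite the lower PCIe lane count.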
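The AMD-or-Intel rule of thumb can be written down as a tiny decision helper. This is a sketch under two assumptions: the 2:1 ratio of system RAM to combined VRAM is a common guideline rather than a hard limit, and the 512GB ceiling is the AMD figure quoted in this article. The helper names and the example GPU sizes are hypothetical.

```python
# Hypothetical helper; both constants are assumptions, not vendor specs.
RAM_TO_VRAM_RATIO = 2   # rule of thumb: system RAM >= 2x combined VRAM
AMD_MAX_RAM_GB = 512    # AMD RAM ceiling as quoted in this article


def required_ram_gb(num_gpus: int, vram_per_gpu_gb: int) -> int:
    """System RAM the rule of thumb suggests for a given GPU setup."""
    return RAM_TO_VRAM_RATIO * num_gpus * vram_per_gpu_gb


def suggest_platform(num_gpus: int, vram_per_gpu_gb: int) -> str:
    """Return "AMD" if the suggested RAM fits under the AMD ceiling, else "Intel"."""
    if required_ram_gb(num_gpus, vram_per_gpu_gb) <= AMD_MAX_RAM_GB:
        return "AMD"
    return "Intel"


if __name__ == "__main__":
    print(suggest_platform(8, 24))  # 192GB VRAM -> 384GB RAM suggested -> AMD
    print(suggest_platform(4, 80))  # 320GB VRAM -> 640GB RAM suggested -> Intel
```

The helper deliberately ignores PCIe lanes; in a real build you would check both the lane budget and the RAM budget before settling on a platform.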

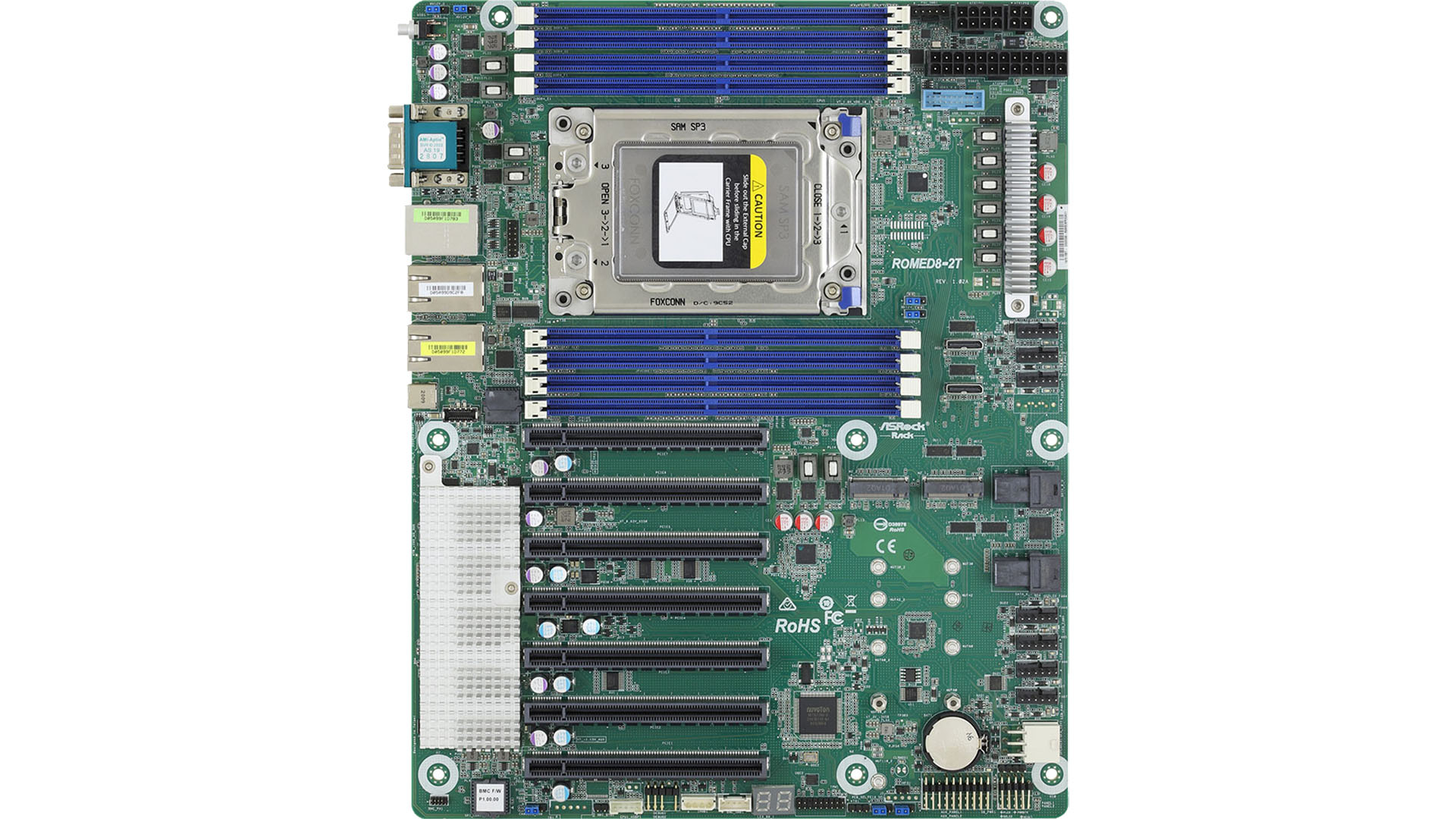

A motherboard for all of your machine learning needs

Depending on which CPU you go with, a motherboard with the appropriate socket will follow suit. It doesn’t matter if the CPU has all the PCIe lanes in the world if the motherboard doesn’t have enough PCIe x16 slots for the GPUs, so make sure there are enough slots for the number of GPUs you intend to run.

You may have noticed that most motherboards come with 4 such slots, and there is a very good reason for that. Most powerful GPUs come with at least a 2-slot air cooler attached. Proper spacing is required to allow at least some airflow and prevent overheating. However, this limits the total number of GPUs per system to only 4. One way around this limitation is to pair a specialized motherboard with up to 7 PCIe x16 slots with liquid cooling, which shrinks each GPU down to a single slot. While it may seem impossible, the details of this solution will be outlined in the next blog.
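The slot-spacing problem is easy to model. The sketch below greedily places cards from the top slot down, where a card that is N slots thick blocks the next N-1 positions. Slot positions are counted in expansion-slot units, the chassis is assumed to have enough rear brackets for the bottom card to overhang, and extra airflow gaps are ignored; `max_gpus` is a hypothetical illustration, not a compatibility checker.

```python
from typing import Iterable


def max_gpus(slot_positions: Iterable[int], card_width: int) -> int:
    """Count GPUs that fit when each card covers card_width adjacent slots.

    slot_positions: expansion-slot positions of the x16 slots (1 = top).
    card_width: how many expansion slots one card's cooler occupies.
    Assumes the chassis has room for the bottom card to overhang.
    """
    if card_width < 1:
        raise ValueError("card_width must be at least 1")
    count = 0
    next_free = None  # first slot position not covered by the previous card
    for pos in sorted(slot_positions):
        if next_free is None or pos >= next_free:
            count += 1
            next_free = pos + card_width
    return count


if __name__ == "__main__":
    typical_board = [1, 3, 5, 7]          # 4 x16 slots, spaced two apart
    seven_slot_board = list(range(1, 8))  # 7 x16 slots, back to back
    print(max_gpus(typical_board, 2))     # dual-slot air coolers -> 4
    print(max_gpus(seven_slot_board, 2))  # same cards, more slots -> 4
    print(max_gpus(seven_slot_board, 1))  # single-slot liquid-cooled cards -> 7
```

Note that a 7-slot board doesn’t help on its own: with 2-slot coolers it still fits only 4 cards, while single-slot cards fill all 7.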

Lastly, let’s not forget about connectivity, one of the primary roles of the motherboard. You will want the motherboard to stitch all the parts together and make them cooperate flawlessly. Having the right number of RAM and M.2 slots, as well as SATA, SAS, and U.2 connectors, is vital for expandability and for having enough storage available for your data sets.

These tips only scratch the surface of how to harness the power of a server in a workstation. The ongoing series will share more professional insights along the way. Be sure to read our future articles to learn more about the best GPU options for your AI workflow.